KNN CLASSIFIER, INTRODUCTION TO K-NEAREST NEIGHBOR ALGORITHM

Most of the machine learning algorithms are parametric. What do we mean by parametric? Let’s say if we are trying to model an linear regression model with one dependent variable and one independent variable. The best fit we are looking is the line equations with optimized parameters.

The parameters could be the intercept and coefficient. For any classification algorithm, we will try to get a boundary. Which successfully separates different target classes.

Let’s say for support vector machine we will try to find the margins and the support vectors. In this case, also we will have some set of parameters. Which needs to be optimized to get decent accuracy.

But today we are going to learn a different kind of algorithm which is non-parametric classification algorithm. Thinking how we can model such algorithm.

Let’s walk through this post to get know how we can do that. Just to give you one line summary.

The algorithm uses the neighbor points information to predict the target class.

Before we drive further let’s look at the table of contents.

Table of contents

- Introduction to k-nearest neighbor classifier

- K- nearest neighbor classification step by step procedure.

- Knn applications

- K-nearest neighbor (knn) algorithm pseudocode

- K-nearest neighbor algorithm example

- How to choose the k value

- Condensed nearest neighbor data reduction rule

- K-Nearest Neighbor case study

- Advantages of k-nearest neighbors algorithm

- Limitation to k-nearest neighbors algorithm

- References

- Related Courses

Introduction to K-nearest neighbor classifier

K-nearest neighbor classifier is one of the introductory supervised classifier, which every data science learner should be aware of. Fix & Hodges proposed K-nearest neighbor classifier algorithm in the year of 1951 for performing pattern classification task.

For simplicity, this classifier is called as Knn Classifier. To be surprised k-nearest neighbor classifier mostly represented as Knn, even in many research papers too. Knn address the pattern recognition problems and also the best choices for addressing some of the classification related tasks.

The simple version of the K-nearest neighbor classifier algorithms is to predict the target label by finding the nearest neighbor class. The closest class will be identified using the distance measures like Euclidean distance.

K-nearest neighbor classification step by step procedure

Before diving into the k-nearest neighbor, classification process lets’s understand the application-oriented example where we can use the knn algorithm.

Knn classification application

Let’s assume a money lending company “XYZ” like UpStart, IndiaLends, etc. Money lending XYZ company is interested in making the money lending system comfortable & safe for lenders as well as for borrowers. The company holds a database of customer’s details.

Using customer’s detailed information from the database, it will calculate a credit score(discrete value) for each customer. The calculated credit score helps the company and lenders to understand the credibility of a customer clearly. So they can simply take a decision whether they should lend money to a particular customer or not.

The customer’s details could be:

- Educational background details.

- Highest graduated degree.

- Cumulative grade points average (CGPA) or marks percentage.

- The reputation of the college.

- Consistency in his lower degrees.

- Whether to take the education loan or not.

- Cleared education loan dues.

- Employment details.

- Salary.

- Year of experience.

- Got any onsite opportunities.

- Average job change duration.

The company(XYZ) use’s these kinds of details to calculate credit score of a customer. The process of calculating the credit score from the customer’s details is expensive. To reduce the cost of predicting credit score, they realized that the customers with similar background details are getting a similar credit score.

So, they decided to use already available data of customers and predict the credit score using it by comparing it with similar data. These kinds of problems are handled by the k-nearest neighbor classifier for finding the similar kind of customers.

K-nearest neighbor (Knn) algorithm pseudocode:

Let (Xi, Ci) where i = 1, 2……., n be data points. Xi denotes feature values & Ci denotes labels for Xifor each i.

Assuming the number of classes as ‘c’

Ci ∈ {1, 2, 3, ……, c} for all values of i

Assuming the number of classes as ‘c’

Ci ∈ {1, 2, 3, ……, c} for all values of i

Let x be a point for which label is not known, and we would like to find the label class using k-nearest neighbor algorithms.

Knn Algorithm Pseudocode:

- Calculate “d(x, xi)” i =1, 2, ….., n; where d denotes the Euclidean distance between the points.

- Arrange the calculated n Euclidean distances in non-decreasing order.

- Let k be a +ve integer, take the first k distances from this sorted list.

- Find those k-points corresponding to these k-distances.

- Let ki denotes the number of points belonging to the ith class among k points i.e. k ≥ 0

- If ki >kj ∀ i ≠ j then put x in class i.

For data science, beginners the about pseudocode will be hard to understand. So let’s understand the knn algorithm using an example.

K-Nearest neighbor algorithm example

K- Nearest neighbor algorithm example

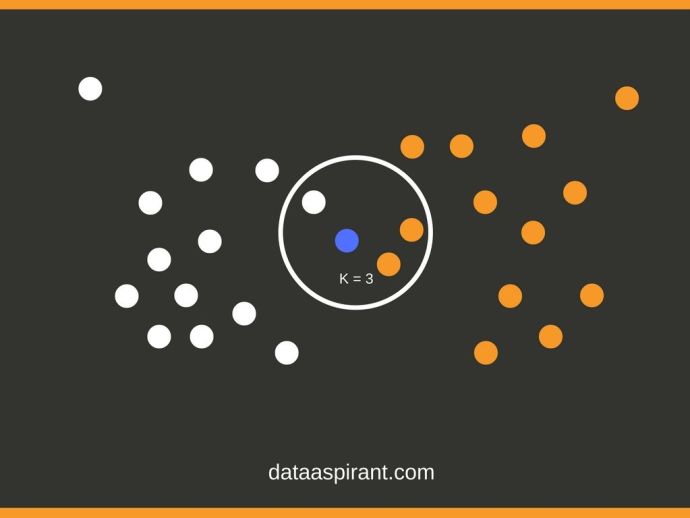

Let’s consider the above image where we have two different target classes white and orangecircles. We have total 26 training samples. Now we would like to predict the target class for the blue circle. Considering k value as three, we need to calculate the similarity distance using similarity measures like Euclidean distance.

If the similarity score is less which means the classes are close. In the image, we have calculated distance and placed the less distance circles to blue circle inside the Big circle.

To learn about different similarity measures, please check out

With the above example, you got some idea about the process of the knn algorithm. Now read the next paragraph to understand the knn algorithm in technical words.

Let’s consider a setup with “n” training samples, where xi is the training data point. The training data points are categorized into “c” classes. Using KNN, we want to predict class for the new data point. So, the first step is to calculate the distance(Euclidean) between the new data point and all the training data points.

Next step is to arrange all the distances in non-decreasing order. Assuming a positive value of “K” and filtering “K” least values from the sorted list. Now, we have K top distances. Let ki denotes no. of points belonging to the ith class among k points. If ki >kj ∀i ≠ j then put x in class i.

Nearest Neighbor Algorithm:

Nearest neighbor is a special case of k-nearest neighbor class. Where k value is 1 (k = 1). In this case, new data point target class will be assigned to the 1st closest neighbor.

How to choose the value of K?

Selecting the value of K in K-nearest neighbor is the most critical problem. A small value of K means that noise will have a higher influence on the result i.e., the probability of overfitting is very high. A large value of K makes it computationally expensive and defeats the basic idea behind KNN (that points that are near might have similar classes ). A simple approach to select k is k = n^(1/2).

To optimize the results, we can use Cross Validation. Using the cross-validation technique, we can test KNN algorithm with different values of K. The model which gives good accuracy can be considered to be an optimal choice.

It depends on individual cases, at times best process is to run through each possible value of k and test our result.

Condensed Nearest Neighbor Data Reduction Rule:

Working on a big dataset can be an expensive task. Using the condensed nearest neighbor rule, we can clean our data and can sort the important observations out of it. This process can reduce the execution time of the machine learning algorithm. But there is a chance of accuracy reduction.

The steps to condense is to divide data points into these:

- Outliers: Observations that lie at an abnormal distance from all the data points. Most of these are extreme values. Removing these observations will increase the accuracy of the model.

- Prototypes: Minimum points in training set required to recognize non-outlier points.

- Absorbed points: These are points that are correctly identified to be non-outlier points.

K-Nearest Neighbor case study

Breast cancer diagnosis using k-nearest neighbor (Knn) algorithm

To diagnose Breast Cancer, the doctor uses his experience by analyzing details provided by a) Patient’s Past Medical History b) Reports of all the tests performed. At times, it becomes difficult to diagnose cancer even for experienced doctors. Since the information provided by the patient might be unclear and insufficient.

Breast cancer dataset details

Breast cancer database was obtained from the University of Wisconsin Hospitals, Madison from Dr. William H. Wolberg. It contains 699 samples with 10 attributes.

The Main objective is to predict whether it’s benign or malignant. Many Scientists worked on this dataset and predicted class using different algorithms.

According to a research paper of M.Sarkar & T.Y. Leong, Department of Computer Science, The National University Singapore

“Conceptually and implementation-wise, the K-nearest neighbors algorithm is simpler than other techniques that have been applied to this problem. In addition, the K-nearest neighbors algorithm produces the overall classification result 1.17% better than the best result known for this problem.”

This is a classification problem and most of the classification techniques can be classified into the following three groups:

- Parametric

- Semiparametric

- Non-Parametric

Parametric & Semiparametric classifiers need specific information about the structure of data in training set. It is difficult to fulfill this requirement in many cases. So, non-parametric classifier like KNN was considered.

Data was randomly split into training, cross-validation & testing data. Experimentation was done with the value of K from K = 1 to 15. With KNN algorithm, the classification result of test set fluctuates between 99.12% and 98.02%. The best performance was obtained when K is 1.

Advantages of K-nearest neighbors algorithm

- Knn is simple to implement.

- Knn executes quickly for small training data sets.

- performance asymptotically approaches the performance of the Bayes Classifier.

- Don’t need any prior knowledge about the structure of data in the training set.

- No retraining is required if the new training pattern is added to the existing training set.

Limitation to K-nearest neighbors algorithm

- When the training set is large, it may take a lot of space.

- For every test data, the distance should be computed between test data and all the training data. Thus a lot of time may be needed for the testing.

References

[2] Sarkar M, Leong TY. Application of K-nearest neighbors algorithm on breast cancer diagnosis problem

Comments

Post a Comment